Public Service Announcement on Data Center Architecture from Google

I don’t know about you, but I have never confused Google with an eleemosynary (relating to or supported by charity) institution. After all, they are in it for the money, and this is not meant as a bad thing; quite the contrary. And, as we are all well aware, they are very good at monetizing assets of all kinds. It was thus refreshing, dare I say surprising, to read a recent Google Research Blog post, Pulling Back the Curtain on Google’s Network Infrastructure, by Amin Vahdat, Google Fellow.

You read the title correctly. As Vahdat states:

Ten years ago, we realized that we could not purchase, at any price, a datacenter network that could meet the combination of our scale and speed requirements. So, we set out to build our own datacenter network hardware and software infrastructure… From relatively humble beginnings, and after a misstep or two, we’ve built and deployed five generations of datacenter network infrastructure. Our latest-generation Jupiter network has improved capacity by more than 100x relative to our first generation network, delivering more than 1 petabit/sec of total bisection bandwidth. This means that each of 100,000 servers can communicate with one another in an arbitrary pattern at 10Gb/s.
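The figures in that quote can be checked with simple arithmetic. The short sketch below assumes decimal SI units (1 Gb = 10^9 bits, 1 Pb = 10^15 bits) and treats the quoted total as the plain product of server count and per-server rate. That is an illustrative reading of the numbers, not a formal definition of bisection bandwidth, and the function name is made up for this example.

```python
# Sanity check of the numbers in the quote (decimal SI units assumed).
GBIT = 10**9    # bits in one gigabit
PBIT = 10**15   # bits in one petabit

servers = 100_000            # server count from the quote
per_server_bps = 10 * GBIT   # 10 Gb/s per server, from the quote


def aggregate_bandwidth_bps(num_servers: int, rate_bps: int) -> int:
    """Hypothetical helper: total rate if every server sends at rate_bps."""
    return num_servers * rate_bps


total_bps = aggregate_bandwidth_bps(servers, per_server_bps)
total_pbit = total_bps / PBIT

print(f"{servers:,} servers x 10 Gb/s = {total_pbit} Pb/s")
```

Under those assumptions the product comes to 1 petabit/sec, which lines up with the "more than 1 petabit/sec" figure in the quote.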

What distinguishes the blog is that it is no exercise in Google patting itself on the back. It is a full reveal, and after reading through the blog and its various links it is clear that this emperor not only has clothes but is a fashion setter whose tips you will want to contemplate. This really is something to bookmark and spend some time with if you are in the Transforming Network Infrastructure Community.

What is particularly fascinating is not just how fast and how far we have come, but the obstacles Google overcame to get from there to here. That work has laid the groundwork for Google to move ahead as the data tsunami, which it is in no small measure generating, continues to grow exponentially, and as it looks to leverage its data center operations expertise through Google Cloud Platform in its battles with Amazon Web Services and Microsoft.

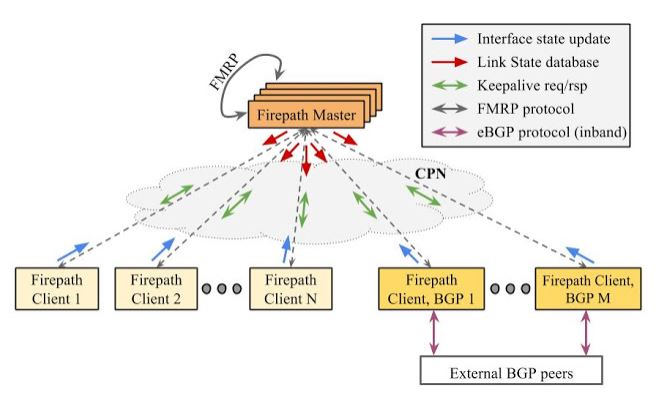

As a teaser for reading the blog and supporting materials, the image above is Vahdat’s visualization of how Google “Transformed routing from a pair-wise, fully distributed (but somewhat slow and high overhead) scheme to a logically-centralized scheme under the control of a single dynamically-elected master…”

The blog also goes over the history of how all of this happened, and notes that the breakthroughs behind the data center transformation spanned all aspects of Network, Compute and Storage. As noted, this is not just educational reading as a standalone piece; it also contains links to extremely insightful additional technical information.

The reality is that while many companies aspire to have and monetize hyperscale data centers like Google’s, few will need to, or are likely to, literally go through the steps (and triumphs) of growing their own. That said, what has become interesting in the increasingly open source and more transparent world of data center architecture is that Google, and for that matter Facebook and other massive hyperscale data center operators, now see sharing with the community at large as a good thing.

This is not to be confused with charity, although it is a public service, since, as Vahdat points out, Google has ulterior motives for being so generous. Nonetheless, history shows that what Google does tends to set trends that others embrace, and as reference material for your own transformation this is a real find.

Edited by Maurice Nagle